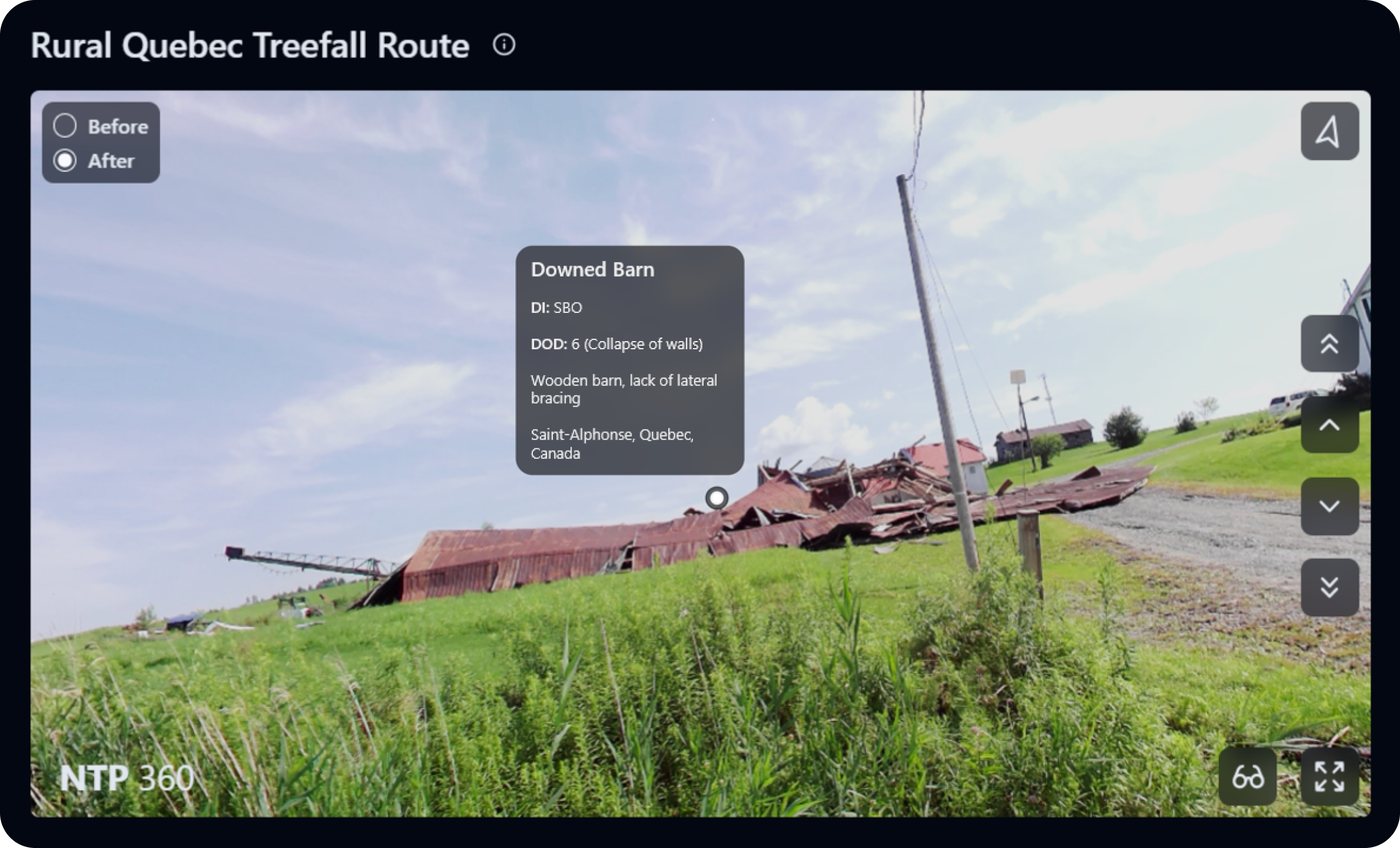

A central goal of the Northern Tornadoes Project (NTP) is to improve our understanding of severe and extreme weather and its implications for people, property, and the climate in Canada. One way NTP compiles and communicates its damage survey findings is by creating event summary maps that detail the location, measures of damage, and a description of each storm event.

Event damage is also often documented visually with ground and drone photos. But what if we could experience these findings from the perspective of the damage surveyor, navigating through and looking around the damaged areas ourselves? With a 360-degree camera and some software ingenuity, this is now possible.

Enter NTP 360, a web-based tool for uploading, viewing, and sharing 360-degree panoramas captured during storm damage surveys, both on-screen and in virtual reality. NTP 360 is part of NTP Insights, a web-based platform of software tools that fellow research interns and I developed over Summer 2023. With NTP 360, you can zoom in on, rotate around, and jump between individual panoramas. This kind of image control adds a level of immersion that is absent from traditional street-level imagery, and it helps other researchers and the wider public share the same contextual knowledge of a storm event as the damage surveyors themselves. But how does it work?

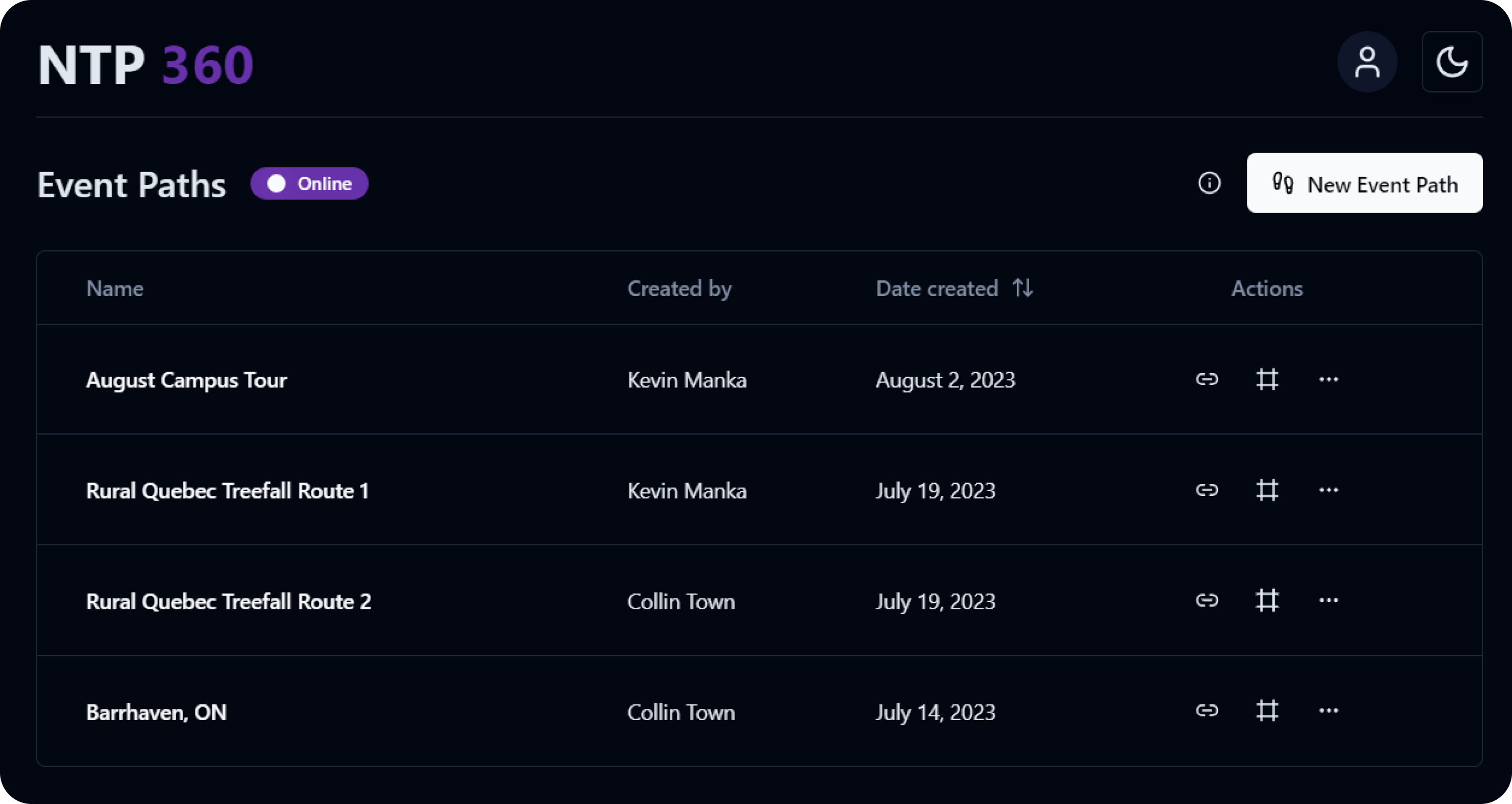

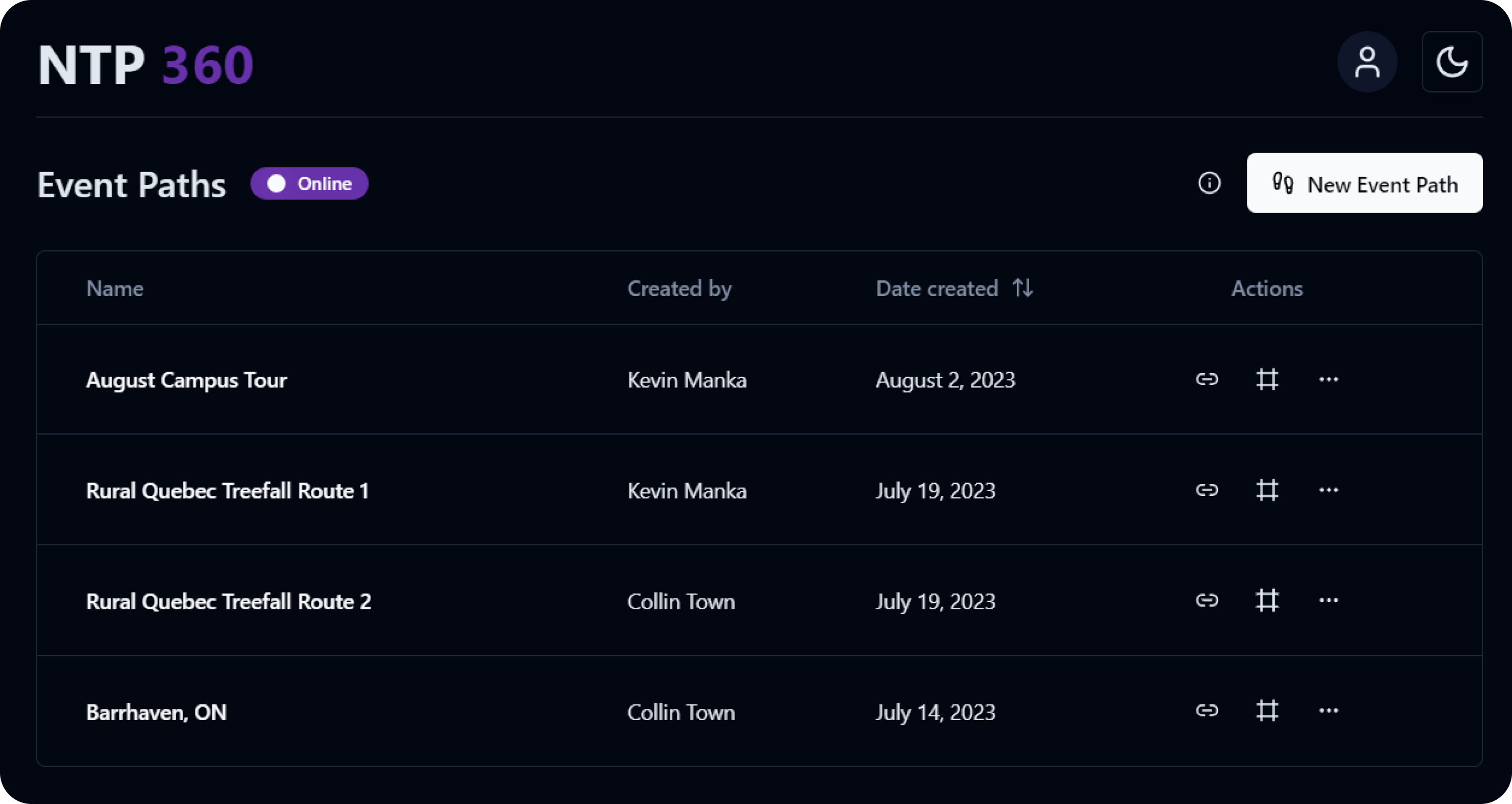

It starts with an event path dashboard available to all authenticated NTP Insights users. The dashboard lists every uploaded event panorama capture path in a single table, along with key information such as the title, uploader, and creation date.

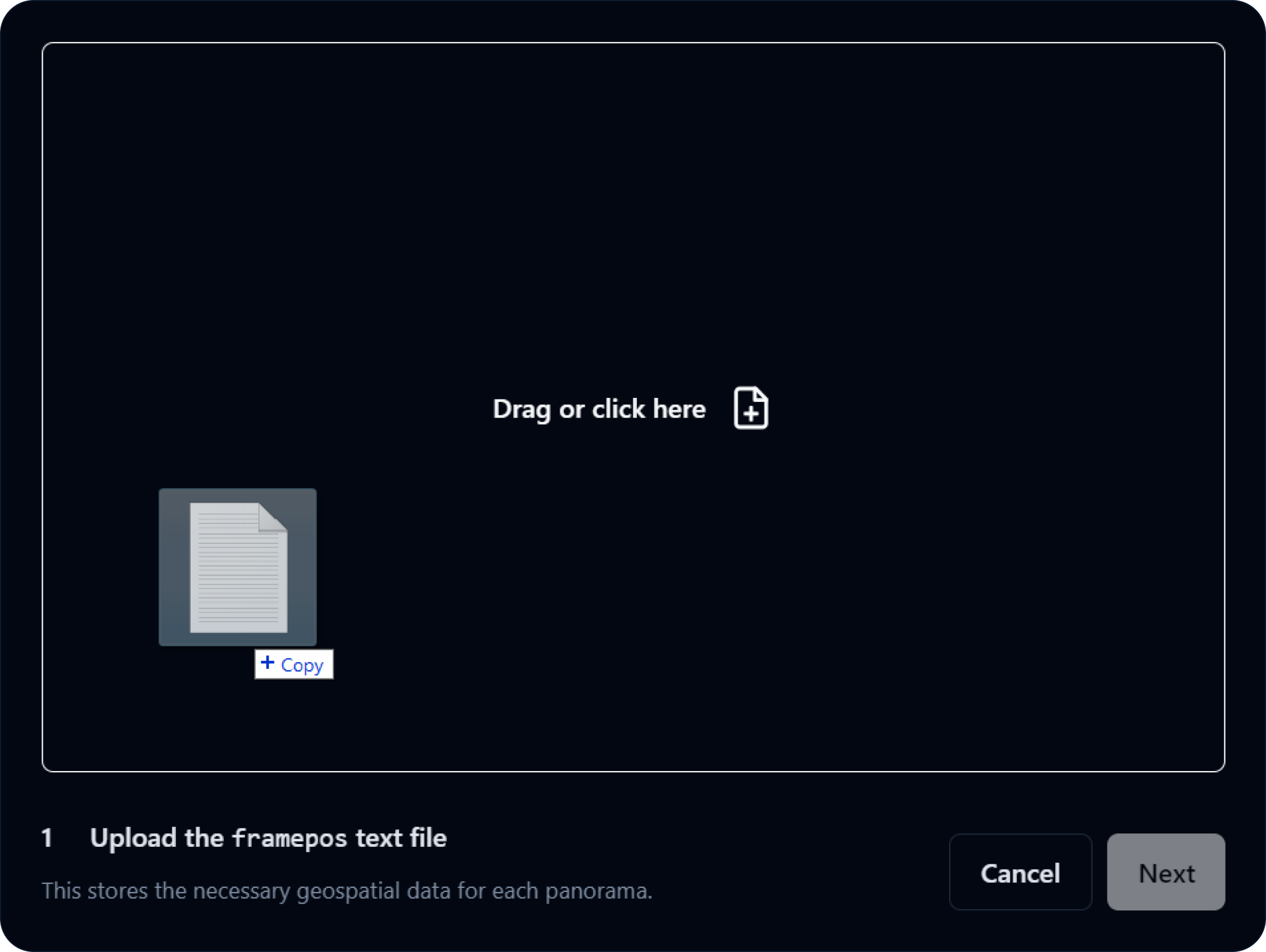

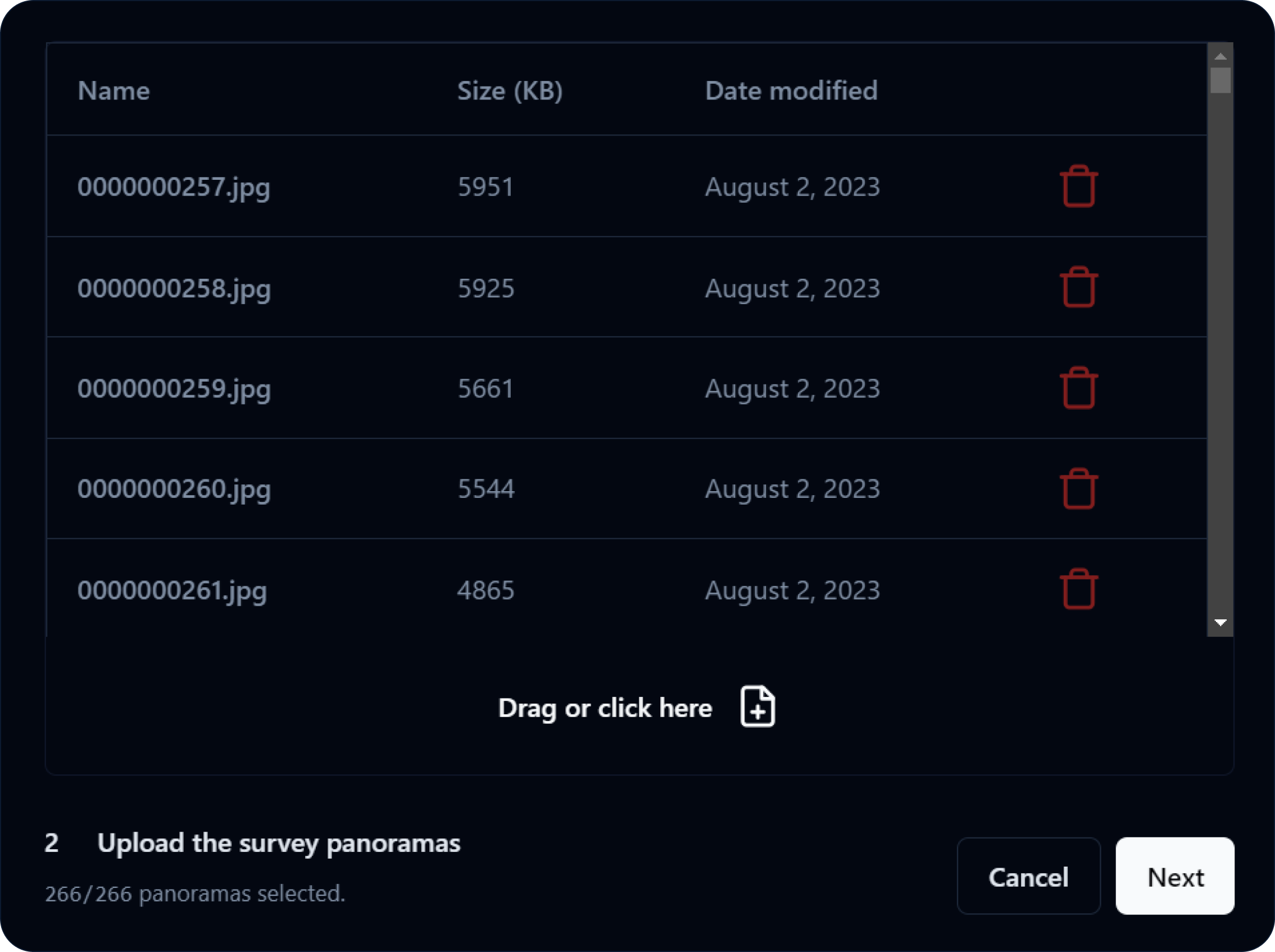

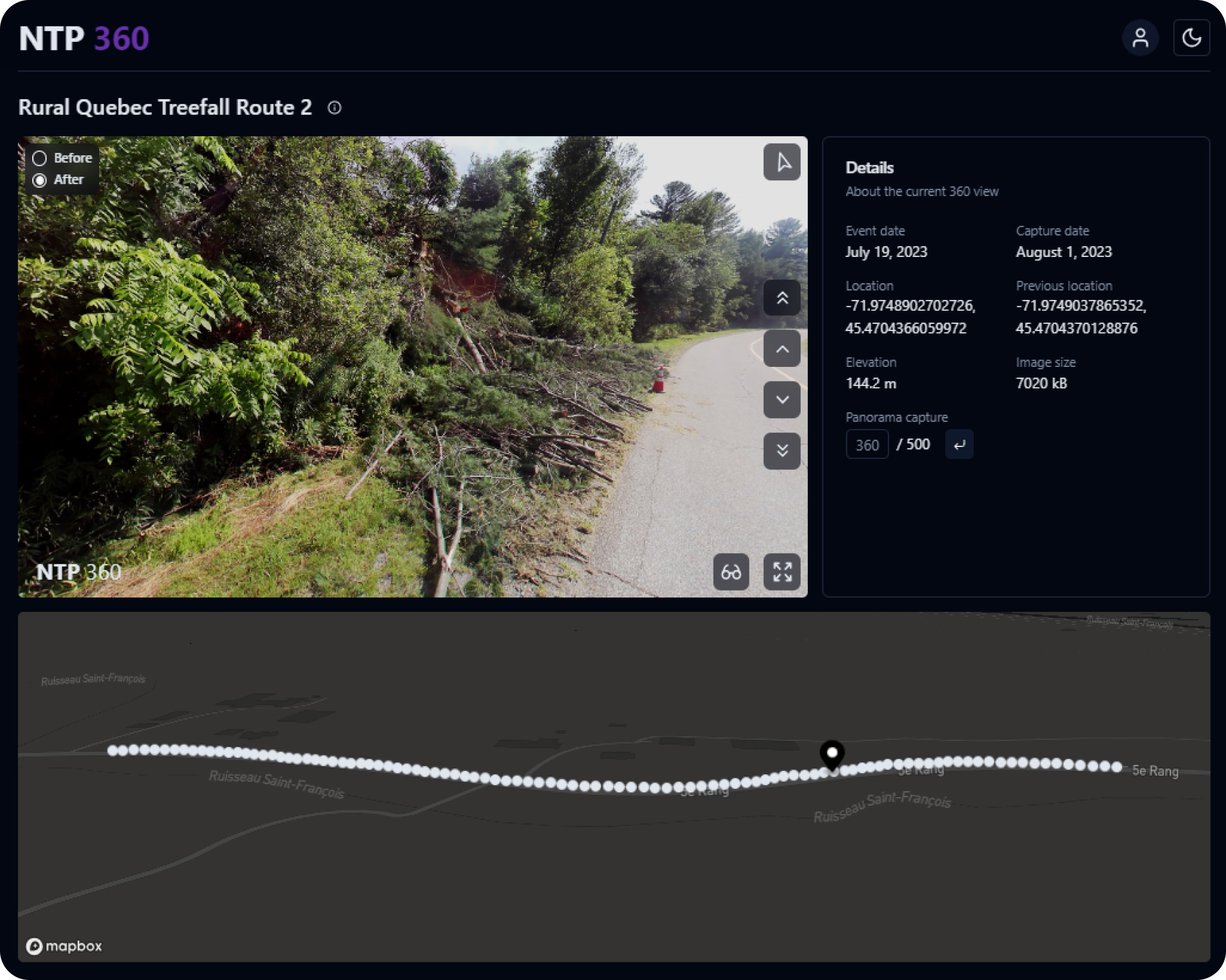

Clicking the New Event Path button opens a three-step upload process. It begins with naming the event, creating a server folder to save the files to, and entering the date on which the event occurred. From there, the file upload begins. NTP 360 is designed around the NCTech iSTAR Pulsar camera workflow, which includes processing raw capture data into individual panoramas. Alongside these panoramas is a framepos.txt file that stores the geospatial data for each panorama, such as latitude, longitude, and altitude. NTP 360 parses this data and uses it to plot map points, populate a Details pane alongside the 360 view, and more.
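To make the parsing step more concrete, here is a minimal TypeScript sketch that turns framepos.txt text into panorama records and then into a GeoJSON FeatureCollection, a format Mapbox map sources accept. It is not NTP 360's actual code. The comma delimiter, the column positions in `ASSUMED_COLUMNS`, and the names `parseFramepos` and `toFeatureCollection` are all hypothetical; the real framepos.txt layout may differ, so the column map is meant to be adjusted.

```typescript
// Hypothetical column positions: adjust to match the real framepos.txt layout.
interface ColumnMap {
  latitude: number;
  longitude: number;
  altitude: number;
}

const ASSUMED_COLUMNS: ColumnMap = { latitude: 1, longitude: 2, altitude: 3 };

interface PanoramaPoint {
  index: number; // position in the capture sequence (0 = first panorama)
  latitude: number;
  longitude: number;
  altitude: number;
}

function parseFramepos(
  text: string,
  columns: ColumnMap = ASSUMED_COLUMNS,
  delimiter = ",",
): PanoramaPoint[] {
  const points: PanoramaPoint[] = [];
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "") continue; // ignore blank lines
    const fields = line.split(delimiter).map((field) => field.trim());
    const latitude = Number(fields[columns.latitude]);
    const longitude = Number(fields[columns.longitude]);
    const altitude = Number(fields[columns.altitude]);
    // Skip header or malformed lines whose coordinate fields are not numeric.
    if (![latitude, longitude, altitude].every(Number.isFinite)) continue;
    points.push({ index: points.length, latitude, longitude, altitude });
  }
  return points;
}

// GeoJSON positions are ordered [longitude, latitude, altitude], not [lat, lng].
function toFeatureCollection(points: readonly PanoramaPoint[]) {
  return {
    type: "FeatureCollection" as const,
    features: points.map((point) => ({
      type: "Feature" as const,
      geometry: {
        type: "Point" as const,
        coordinates: [point.longitude, point.latitude, point.altitude] as [number, number, number],
      },
      properties: { index: point.index },
    })),
  };
}
```

In Mapbox GL JS, the result could be passed to `map.addSource("panoramas", { type: "geojson", data: toFeatureCollection(points) })`. The longitude-first ordering is the detail most worth double-checking, since swapping it silently plots points in the wrong place.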

NTP 360 then counts the line entries in the framepos.txt file to determine how many panoramas the user is expected to upload. Each entry corresponds to one panorama under NCTech’s default naming scheme, which names panorama files chronologically: 00000000, 00000001, 00000002, and so on.
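Because file names follow the entry order, the expected set of names can be derived from the entry count alone, and comparing that set against the uploaded files reveals any gaps. The sketch below assumes each upload is named `<eight-digit number>.<extension>`; the function names are hypothetical, and the post does not say how NTP 360 reports a mismatch to the user.

```typescript
// NCTech's default scheme as described above: zero-padded, eight digits, starting at 00000000.
const NAME_WIDTH = 8;

function expectedPanoramaNames(entryCount: number): string[] {
  return Array.from({ length: entryCount }, (_, i) => String(i).padStart(NAME_WIDTH, "0"));
}

// Strip a trailing extension such as ".jpg" (assumption: files are "<number>.<ext>").
function baseName(fileName: string): string {
  const dot = fileName.lastIndexOf(".");
  return dot > 0 ? fileName.slice(0, dot) : fileName;
}

// Names that framepos.txt says should exist but were not among the uploaded files.
function missingPanoramas(entryCount: number, uploaded: readonly string[]): string[] {
  const present = new Set(uploaded.map(baseName));
  return expectedPanoramaNames(entryCount).filter((name) => !present.has(name));
}
```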

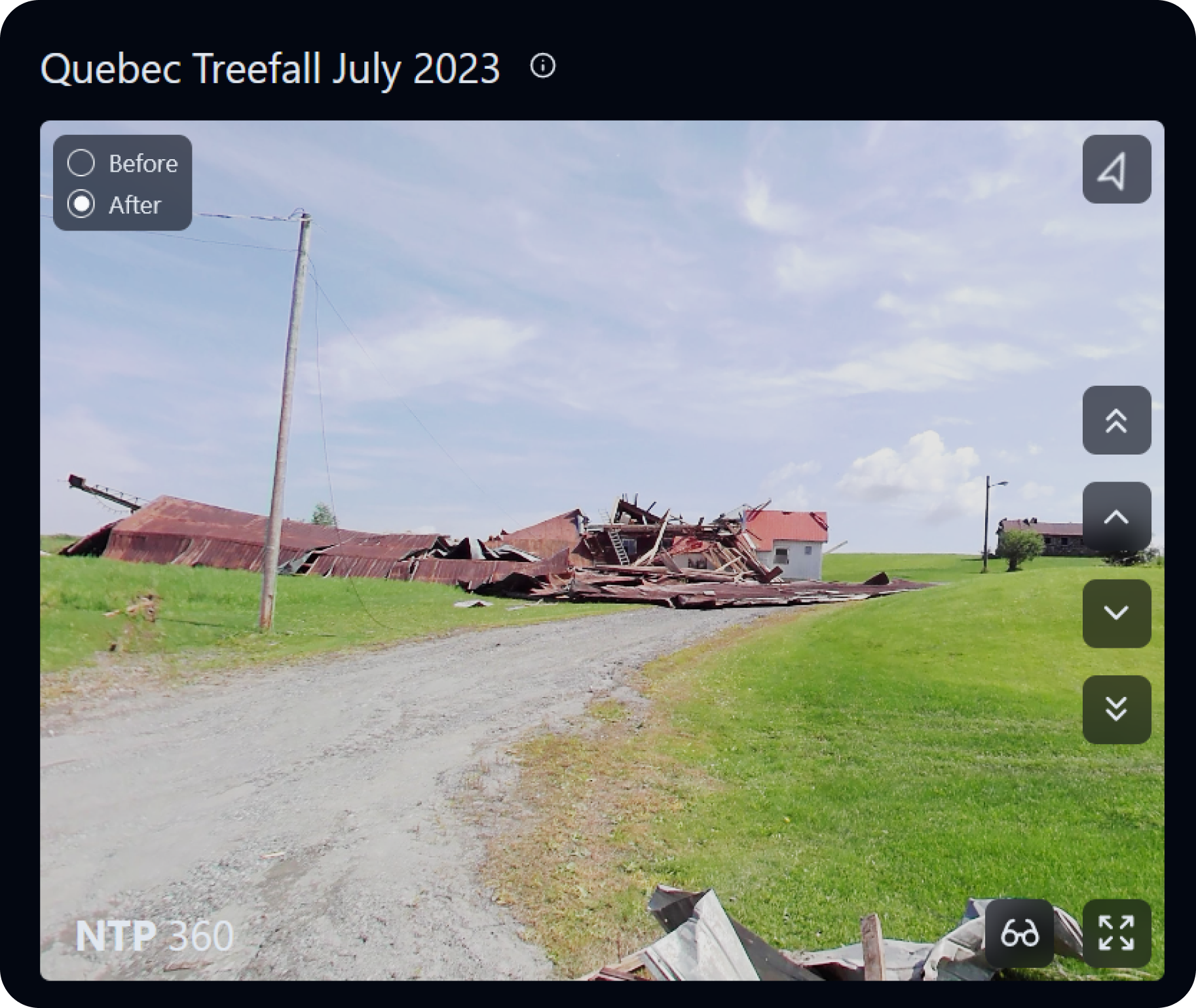

What notably sets NTP 360 apart from a tool like Google Street View is the ability to toggle between Before and After panoramas, directly comparing the effects of severe and extreme weather events in impacted areas. In the final step of the upload process, NTP 360 takes the latitude and longitude of each uploaded iSTAR Pulsar panorama and uses the Google Street View API to find the closest available Street View panorama. The user is then given a copyable or downloadable list of panorama IDs to enter into Street View Download 360 for export.
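The post doesn't say which Street View endpoint NTP 360 calls. One option that fits the description is the Street View Static API's image metadata request, which takes a `location`, an optional search `radius` in meters (50 by default), and a `key`, and returns JSON with a `status` and, when a panorama is found, its `pano_id`. The sketch below assumes that endpoint; `findClosestPanoIds`, `FetchLike`, and the other names are hypothetical, and the fetch function is injected so the logic can be tested without network access.

```typescript
const METADATA_ENDPOINT = "https://maps.googleapis.com/maps/api/streetview/metadata";

// Minimal shape of a fetch-like function, so a mock can stand in during tests.
type FetchLike = (url: string) => Promise<{ json(): Promise<unknown> }>;

interface StreetViewMetadata {
  status: string; // "OK", "ZERO_RESULTS", ...
  pano_id?: string;
  date?: string;
  location?: { lat: number; lng: number };
}

function buildMetadataUrl(lat: number, lng: number, apiKey: string, radiusMeters = 50): string {
  const params = new URLSearchParams({
    location: `${lat},${lng}`,
    radius: String(radiusMeters),
    key: apiKey,
  });
  return `${METADATA_ENDPOINT}?${params.toString()}`;
}

function panoIdFromMetadata(meta: StreetViewMetadata): string | null {
  return meta.status === "OK" && meta.pano_id ? meta.pano_id : null;
}

// One request per uploaded panorama; null keeps indices aligned when nothing is nearby.
async function findClosestPanoIds(
  points: readonly { latitude: number; longitude: number }[],
  apiKey: string,
  fetchFn: FetchLike,
): Promise<(string | null)[]> {
  const ids: (string | null)[] = [];
  for (const point of points) {
    const response = await fetchFn(buildMetadataUrl(point.latitude, point.longitude, apiKey));
    const meta = (await response.json()) as StreetViewMetadata;
    ids.push(panoIdFromMetadata(meta));
  }
  return ids;
}

// Newline-separated list of found IDs, suitable for copying or saving as a text file.
function toCopyableList(ids: readonly (string | null)[]): string {
  return ids.filter((id): id is string => id !== null).join("\n");
}
```

In a browser or recent Node.js, `fetchFn` could simply be `(url) => fetch(url)`. Keeping `null` placeholders lets each result stay paired with its iSTAR Pulsar panorama even when no Street View imagery exists nearby.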

With the upload process complete, an event path is created and ready to be shared through a public link or embedded directly into event summary maps as an inline frame (iframe) of the 360 view.
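As a rough sketch of the embedding side, the helper below builds an iframe snippet from a share link. The URL used in practice and the attributes NTP 360 actually emits are not described in the post, so `buildEmbedSnippet` and its defaults are hypothetical. The `allow` attribute is worth noting: `fullscreen` and `xr-spatial-tracking` are the Permissions Policy features that fullscreen mode and WebXR sessions need inside a cross-origin iframe.

```typescript
// Escape characters that would break out of a double-quoted HTML attribute.
function escapeAttribute(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

function buildEmbedSnippet(shareUrl: string, width = 800, height = 450): string {
  return [
    `<iframe src="${escapeAttribute(shareUrl)}"`,
    ` width="${width}" height="${height}"`,
    // Delegate fullscreen and WebXR permissions to the embedded viewer.
    ` allow="fullscreen; xr-spatial-tracking"`,
    ` loading="lazy" title="NTP 360 event path"></iframe>`,
  ].join("");
}
```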

NTP 360’s screen navigation controls are as straightforward as the upload process. Clicking a single chevron button or pressing the up or down arrow key moves you to the next or previous panorama in the sequence, and clicking a double chevron button jumps five panoramas forward or back. You can also jump to a specific panorama by entering its number in the Details pane or by clicking one of the points plotted on the map. These fine-grained controls are particularly helpful for closely spaced sequences, where adjacent panoramas often look very similar.
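The navigation rules above reduce to a small index-stepping function. In this sketch the step sizes (one and five) come from the post, while the rest is assumed: movement clamps at the ends of the sequence instead of wrapping, ArrowUp maps to "next" and ArrowDown to "previous", and a number typed into the Details pane is treated as a zero-based index. All names are hypothetical.

```typescript
type NavAction = "next" | "previous" | "next5" | "previous5";

const STEP: Readonly<Record<NavAction, number>> = {
  next: 1,
  previous: -1,
  next5: 5,
  previous5: -5,
};

// Assumed key mapping; a Map avoids accidental hits on Object.prototype keys.
const KEY_ACTIONS = new Map<string, NavAction>([
  ["ArrowUp", "next"],
  ["ArrowDown", "previous"],
]);

function clampIndex(index: number, count: number): number {
  return Math.min(Math.max(index, 0), count - 1);
}

// Move through the sequence, stopping at the first and last panorama.
function stepIndex(current: number, action: NavAction, count: number): number {
  if (count <= 0) return 0;
  return clampIndex(current + STEP[action], count);
}

function actionForKey(key: string): NavAction | undefined {
  return KEY_ACTIONS.get(key);
}

// Direct jump from the Details pane or a map click; null for unusable input.
function jumpTo(requested: number, count: number): number | null {
  if (!Number.isFinite(requested) || count <= 0) return null;
  return clampIndex(Math.round(requested), count);
}
```

In a component, a `keydown` listener could look up `actionForKey(event.key)` and, when it returns an action, update the current index with `stepIndex`. Clamping is a deliberate choice in this sketch: wrapping from the last panorama back to the first would be disorienting along a physical storm path.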

At the top right of the 360 view is a dynamic compass button that points North relative to the direction you’re currently facing. Clicking it recenters the camera to face North. At the bottom right of the 360 view is the fullscreen button, which, as the name suggests, expands the 360 view to fill the screen. For an uninterrupted viewing experience, pressing the H key toggles the overlaid buttons off, and pressing the Escape key exits fullscreen mode entirely.
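A compass like this needs the camera's heading, which can be computed from its forward direction vector. The sketch assumes a Y-up world in which North lies along -Z (the direction a Three.js camera faces by default) and East along +X; the post does not state NTP 360's axis convention, and the function names are hypothetical. The needle is rotated opposite to the heading so it keeps pointing North on screen.

```typescript
interface Vec3 {
  x: number;
  y: number;
  z: number;
}

// Heading in degrees clockwise from North, in [0, 360).
// Assumes North = -Z and East = +X; the vertical component is ignored.
function headingDegrees(forward: Vec3): number {
  const radians = Math.atan2(forward.x, -forward.z);
  const degrees = (radians * 180) / Math.PI;
  return ((degrees % 360) + 360) % 360;
}

// CSS-style rotation (positive = clockwise) that keeps the needle pointing North.
function compassNeedleRotation(forward: Vec3): number {
  return -headingDegrees(forward);
}
```

When the viewer faces East (heading 90°), North is to their left, so the needle rotates -90°, a quarter turn counterclockwise.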

Most exciting, however, is the VR button next to the fullscreen button. On supported devices, clicking it launches an immersive VR mode that surrounds you with the current panorama. With a VR headset like the Meta Quest Pro, you simply move your head to look around, while the two hand controllers put the same set of controls at your fingertips: pressing the left or right trigger moves you to the previous or next panorama in the sequence, pressing the left or right grip jumps five panoramas back or forward, and pressing Y or B toggles the Before or After panorama.
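Controller input in WebXR is typically read by polling each controller's gamepad every frame, so two pieces are needed: a mapping from buttons to actions, and edge detection so that holding a button fires only once. In the standard `xr-standard` gamepad mapping, button 0 is the primary trigger and button 1 the squeeze (grip). Index 5 for the Y and B buttons on Meta Touch controllers is an assumption, since face buttons are outside the standard mapping. Following the post's left/right pairing, this sketch reads Y (left) as Before and B (right) as After. It is a hypothetical illustration, not NTP 360's MovementControls.

```typescript
type Handedness = "left" | "right";
type XRAction = "previous" | "next" | "previous5" | "next5" | "showBefore" | "showAfter";

const TRIGGER = 0; // xr-standard: primary trigger
const SQUEEZE = 1; // xr-standard: primary squeeze (grip)
const UPPER_FACE_BUTTON = 5; // assumption: Y on the left controller, B on the right

function actionForButton(hand: Handedness, buttonIndex: number): XRAction | null {
  switch (buttonIndex) {
    case TRIGGER:
      return hand === "left" ? "previous" : "next";
    case SQUEEZE:
      return hand === "left" ? "previous5" : "next5";
    case UPPER_FACE_BUTTON:
      return hand === "left" ? "showBefore" : "showAfter";
    default:
      return null;
  }
}

// Indices of buttons that are pressed now but were not pressed on the previous frame.
function newlyPressed(previous: readonly boolean[], current: readonly boolean[]): number[] {
  const pressed: number[] = [];
  current.forEach((isPressed, i) => {
    if (isPressed && !previous[i]) pressed.push(i);
  });
  return pressed;
}
```

Each frame, the pressed flags of `gamepad.buttons` can be compared with the previous frame's flags; only the indices returned by `newlyPressed` are passed to `actionForButton`, so a held trigger advances one panorama rather than one per frame.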

Like the rest of NTP Insights, NTP 360 was built with TypeScript on the Next.js React framework. The three libraries that are central to NTP 360’s functionality are Three.js, React Three Drei, and React Three XR.

Three.js creates an interactive 360-degree environment by mapping each panorama as a texture onto a sphere. The iSTAR Pulsar camera outputs panoramas in an equirectangular projection (like many world maps!), so when they are mapped onto a sphere, the true proportions of the image content are preserved. Three.js also renders with WebGL, so high-resolution texture maps are processed on the GPU.
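The reason an equirectangular image wraps cleanly onto a sphere is that its horizontal axis is linear in longitude and its vertical axis is linear in latitude. The function below is a sketch of that mapping from normalized image coordinates to a unit direction as seen from the sphere's center. The axis convention (Y up, image center along -Z) is an assumption chosen to match the Three.js default camera, and the function name is hypothetical.

```typescript
interface Vec3 {
  x: number;
  y: number;
  z: number;
}

// u, v in [0, 1]: u runs left to right across 360° of longitude,
// v runs top to bottom from latitude +90° to -90°.
// Assumed axes: Y is up and the image center (u = 0.5, v = 0.5) faces -Z.
function equirectToDirection(u: number, v: number): Vec3 {
  const longitude = (u - 0.5) * 2 * Math.PI;
  const latitude = (0.5 - v) * Math.PI;
  return {
    x: Math.cos(latitude) * Math.sin(longitude),
    y: Math.sin(latitude),
    z: -Math.cos(latitude) * Math.cos(longitude),
  };
}
```

Every pixel column is an equal step in longitude and every row an equal step in latitude, which is why no part of the image is stretched once it is back on the sphere.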

If Three.js is the eyes, then React Three Drei is the body. A CameraControls object lets the user pivot the camera around inside the textured sphere. Because the panorama is viewed from inside the sphere, the texture would otherwise appear mirrored, so it is inverted to read correctly from the inside. Implementing these camera controls raised several other considerations and hurdles. The largest obstacle was keeping the Before and After panoramas aligned as the user rotated, so that toggling between them wouldn’t change the direction the camera was facing. This was ultimately achieved by using a Vector3 object that represents North, (0,1,0), together with a starting angle that accounts for the difference in heading between each pair of panoramas.
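Here is one way the Before/After alignment could be expressed, assuming it amounts to rotating one sphere about the vertical (0, 1, 0) axis by the difference between the two panoramas' headings while the camera stays untouched. The headings (compass direction of each image's center, in degrees clockwise from North) are an assumed input, since the post doesn't say where NTP 360 obtains them. The helper names are hypothetical. The conversion to a Three.js `rotation.y` value relies on the right-hand rule, under which positive rotation about +Y is counterclockwise when viewed from above.

```typescript
// Normalize an angle in degrees to the range (-180, 180].
function normalizeDegrees(degrees: number): number {
  let d = ((degrees % 360) + 360) % 360; // now in [0, 360)
  if (d > 180) d -= 360;
  return d;
}

// Clockwise rotation (viewed from above) to apply to the After sphere so that
// the same real-world direction sits in front of the camera in both panoramas.
function alignmentYawDegrees(beforeHeading: number, afterHeading: number): number {
  return normalizeDegrees(afterHeading - beforeHeading);
}

// Three.js rotation.y in radians; positive rotation.y is counterclockwise
// viewed from above, so a clockwise yaw becomes a negative angle.
function threeRotationY(clockwiseYawDegrees: number): number {
  return (-clockwiseYawDegrees * Math.PI) / 180;
}
```

For example, if the Before panorama's center faces North and the After panorama's center faces East, the After sphere is turned 90° clockwise, bringing its North-facing content to the front; the camera's own orientation never changes when the user toggles.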

Finally, React Three XR enables the VR experience that is the hallmark of NTP 360. Built on the WebXR standard, this library has built-in detection of VR support, and it powers a custom MovementControls object that responds to input from a VR headset’s hand controllers.
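React Three XR takes care of detecting VR support, but the underlying WebXR check is small enough to show. WebXR exposes `navigator.xr.isSessionSupported("immersive-vr")`, which resolves to a boolean and may reject, for instance with a SecurityError when a page or iframe is not permitted to use WebXR. The sketch below wraps that call; `canEnterVR` and the `XRSystemLike` interface are hypothetical names, and the object is passed in so the logic can be tested outside a browser.

```typescript
// The one method of navigator.xr this check relies on.
interface XRSystemLike {
  isSessionSupported(mode: string): Promise<boolean>;
}

// Resolve to true only if an immersive VR session is available.
async function canEnterVR(xr: XRSystemLike | undefined): Promise<boolean> {
  if (!xr) return false; // browser without WebXR
  try {
    return await xr.isSessionSupported("immersive-vr");
  } catch {
    return false; // e.g. blocked by a Permissions Policy
  }
}
```

In a browser, this would be called as `canEnterVR((navigator as any).xr)`, and its result would decide whether the VR button is shown.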

Beyond these three core libraries, NTP 360 also uses the Mapbox API to display points marking the location of each panorama. As for the panoramas themselves, a PostgreSQL database stores an ID reference for each image file alongside the corresponding framepos.txt data. Initially, uploads failed because of the sheer size of the data being sent, so to remedy this, the panorama data was split into several smaller uploads behind the scenes.
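Batching like this usually comes down to splitting the work into fixed-size chunks and sending them one after another. The sketch below shows that pattern with an injected `uploadBatch` callback; the batch size, the sequential ordering, and the function names are assumptions, since the post doesn't say what limit was hit or how NTP 360 sizes its batches.

```typescript
// Split items into consecutive groups of at most `size` elements.
function chunk<T>(items: readonly T[], size: number): T[][] {
  if (!Number.isInteger(size) || size < 1) {
    throw new RangeError("size must be a positive integer");
  }
  const batches: T[][] = [];
  for (let i = 0; i < items.length; i += size) {
    batches.push(items.slice(i, i + size));
  }
  return batches;
}

// Upload batches one at a time so each request body stays small.
// Returns the number of batches sent; a rejected upload stops the loop.
async function uploadInBatches<T>(
  items: readonly T[],
  size: number,
  uploadBatch: (batch: T[], batchIndex: number) => Promise<void>,
): Promise<number> {
  const batches = chunk(items, size);
  for (let i = 0; i < batches.length; i++) {
    await uploadBatch(batches[i], i);
  }
  return batches.length;
}
```

Sending batches sequentially trades some speed for predictability: only one request is in flight at a time, and a failure points to a specific batch index that can be retried.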

A lot of work went into making NTP 360 as intuitive and feature-rich as possible within a four-month timeframe, yet several avenues for future expansion remain. For one, adding descriptive markers directly onto individual panoramas would let users leave text-based insights throughout an uploaded event path. For example, a damaged building could be marked with a degree-of-damage rating and additional observations about the sides of the building that were not captured. This feature would go hand in hand with the ability to edit event paths at any time, including adding or removing markers as the user pleases.

For now, you can find comprehensive documentation about NTP 360 and its current feature set in the NTP Insights GitHub repository, including an additional guide on how to use the auxiliary iSTAR Pulsar and Street View Download 360 applications.